Since it began, SciCast has let you make conditional edits on questions that we had previously linked. But if you wanted to link questions, you had to email us. No more! Now users can add links themselves.

We’re looking for forecasting tips from our top SciCasters to include in our training lessons and we would love to hear from you. What tips can you share with fellow forecasters? Possible topic areas include:

and more…

Feel free to comment here or send a message to [email protected]. Thank you!

We have started adding “Accuracy” numbers to emails. For example:

What does that mean? The short answer is that it’s a transform of the familiar Brier score, which we have mentioned in several blog posts. Where the Brier measures your error (low is good), Accuracy measures your success (high is good). This is more intuitive … except when it’s not.
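As a rough illustration, the sketch below computes a multi-outcome Brier score for one question and then flips it into a 0-100 "success" number. The transform used here, `100 * (1 - Brier / 2)`, is a hypothetical linear choice that simply maps the Brier range [0, 2] onto [100, 0]; SciCast's actual Accuracy formula may differ.

```python
def brier(forecast, outcome_index):
    """Multi-outcome Brier score for one resolved question.

    forecast: probabilities for each possible outcome (summing to 1).
    outcome_index: position of the outcome that actually occurred.
    0 is a perfect forecast; 2 means all probability went to a wrong outcome.
    """
    return sum(
        (p - (1.0 if i == outcome_index else 0.0)) ** 2
        for i, p in enumerate(forecast)
    )


def accuracy(forecast, outcome_index):
    """HYPOTHETICAL transform: flip Brier error (low is good) into a
    0-100 success score (high is good). SciCast's real formula may differ."""
    return 100.0 * (1.0 - brier(forecast, outcome_index) / 2.0)


# Example: 80% on the outcome that happened.
print(brier([0.8, 0.2], 0))     # about 0.08
print(accuracy([0.8, 0.2], 0))  # about 96
```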

If you would like to start forecasting but you’re not sure how to begin, or you’re a veteran SciCaster ready to take your forecasting to the next level, we’re here to help with training. The SciCast course, Understanding SciCast, is now available. Log in to SciCast Predict and click “Training.”

We’re awarding Amazon Gift Cards to the first 180 SciCasters who complete the CHAMPS and KNOW quizzes with 100% accuracy on the first try. Badges are earned by completing a lesson with a score of 75% on each quiz.

Prediction market performance can be assessed in several ways. Recently, SciCast researchers have been taking a closer look at market accuracy, which can itself be measured with different scoring rules. A commonly used one is the Brier score, which functions much like the squared error between the forecasts and the outcomes on questions.
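A minimal sketch of that kind of assessment, using made-up forecasts: average the Brier score over several resolved questions and compare it with a uniform "no information" baseline that spreads probability evenly. The data and function names are hypothetical, not SciCast's evaluation code.

```python
from statistics import mean


def brier(forecast, outcome_index):
    """Squared error between a probability vector and the 0/1 outcome vector."""
    return sum(
        (p - (1.0 if i == outcome_index else 0.0)) ** 2
        for i, p in enumerate(forecast)
    )


def mean_brier(forecasts, outcomes):
    """Average Brier score over a set of resolved questions (lower is better)."""
    return mean(brier(f, o) for f, o in zip(forecasts, outcomes))


def uniform_baseline(forecasts, outcomes):
    """Brier score of a forecaster who always spreads probability evenly."""
    flat = [[1.0 / len(f)] * len(f) for f in forecasts]
    return mean_brier(flat, outcomes)


# HYPOTHETICAL final market forecasts on three binary questions.
market = [[0.9, 0.1], [0.3, 0.7], [0.6, 0.4]]
resolved = [0, 1, 1]  # index of the outcome that occurred on each question

print(round(mean_brier(market, resolved), 3))        # 0.307
print(round(uniform_baseline(market, resolved), 3))  # 0.5
```

Here the hypothetical market beats the baseline because its average squared error is lower.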

Over the past 3 weeks we have more than tripled the number of users on SciCast. Hooray! But with growth come growing pains, one of which is an increase in spam in our comments. Try as they might, certain people just can’t resist writing complete nonsense into a discussion thread. So we’ve introduced a way for the better citizens of SciCast to mark a comment as spam:

A comment must be marked as spam a certain number of times by multiple people. If it is, it will be hidden from the comment thread. So please do your duty and if you see something that’s clearly spammy, mark it. If you’re unsure, ask us about it.
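The rule above can be sketched as a small class. The threshold of 3 and the `Comment` class are hypothetical, since we haven't said how many flags are needed. Counting distinct user IDs in a set is one way to make sure the flags come from multiple people rather than one person clicking repeatedly.

```python
from dataclasses import dataclass, field

# HYPOTHETICAL threshold: the real number of required flags is not stated.
SPAM_THRESHOLD = 3


@dataclass
class Comment:
    text: str
    flagged_by: set = field(default_factory=set)  # distinct user IDs

    def mark_as_spam(self, user_id):
        # A set ignores repeat flags, so one user cannot hide a comment alone.
        self.flagged_by.add(user_id)

    @property
    def hidden(self):
        return len(self.flagged_by) >= SPAM_THRESHOLD


c = Comment("buy cheap watches!!!")
for _ in range(5):
    c.mark_as_spam("alice")
print(c.hidden)  # False: five flags, but only one person
c.mark_as_spam("bob")
c.mark_as_spam("carol")
print(c.hidden)  # True: three different people flagged it
```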

Another change to commenting: you must now verify your email address before you can comment. Currently we do NOT require an email address to sign up and begin participating in SciCast. But a quick survey of spam discussion items revealed that many of those comments traced back to people who did not provide an email address when they registered. We still aren’t requiring a valid email address to register, but we now require one to comment. We hope this, too, cuts down on spammy comments.

An even more substantive change we’re excited about is “recurring forecasts.” If you ask SciCast to, we will make a forecast for you once a day on a given question, based on an initial forecast. So if you use Power Mode to raise the chance to 75%, you can set aside a budget of points to raise it back to 75% whenever the once-a-day check finds it below that threshold. If you used Safe Mode, we’ll simply make that same forecast for you for 7 days in a row (or for however many days you specify) using the usual Safe Mode rules.
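One way to picture the Power Mode recurrence is the simulation below: each day it checks the consensus, tops it back up to the target if it has drifted below, and charges the edit against the budget. The daily consensus values, the 0.75 target, and the `linear_cost` pricing are all hypothetical; real edit costs depend on the market's scoring rule, not on this simple stand-in. We also assume the recurrence stops once the remaining budget cannot cover an edit. Safe Mode recurrence (the same forecast repeated for N days) is simpler and is not modeled here.

```python
def run_recurring_power_forecast(daily_consensus, target, budget, cost_fn):
    """Simulate a once-a-day recurring Power Mode forecast.

    daily_consensus: consensus probability seen at each daily check
        (HYPOTHETICAL input; in reality other forecasters move it).
    target: the probability to restore, e.g. 0.75.
    budget: total points the user set aside.
    cost_fn(current, target): HYPOTHETICAL price in points of one edit.
    Returns the list of (day, cost) edits made and the unspent budget.
    """
    edits = []
    for day, current in enumerate(daily_consensus, start=1):
        if current >= target:
            continue  # already at or above the threshold: nothing to do
        cost = cost_fn(current, target)
        if cost > budget:
            break  # ASSUMPTION: recurrence ends when the budget can't cover an edit
        budget -= cost
        edits.append((day, cost))
    return edits, budget


# HYPOTHETICAL linear pricing: 10 points per percentage point moved.
def linear_cost(current, target):
    return round((target - current) * 1000)


edits, left = run_recurring_power_forecast(
    [0.80, 0.70, 0.75, 0.60], target=0.75, budget=300, cost_fn=linear_cost
)
print(edits, left)  # [(2, 50), (4, 150)] 100
```

With a smaller budget the same sequence of checks runs out sooner, which is why a budget can last one day or months depending on how much each edit costs.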

Here’s an example:

Let’s say I made a forecast that it’s “Unlikely (20%-40%)” that “The same machine will hold the #1 rank on the Top500 and Graph500 lists in August 2014.” Once I’ve completed the forecast, I can ask SciCast to make the same forecast for me once a day for a week, by checking the box.

If I’m forecasting in Power Mode, each edit can be much bigger (and more expensive), so instead of #days, I specify a total budget. The Power Mode forecast could use it all in one day, or it could last for months, depending on how much each edit costs:

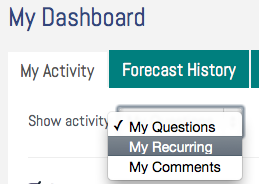

The values “1 week” and “300 points” are just defaults. I can use “My Dashboard” for more control. Here “My Dashboard -> My Activity -> My Questions” shows me the most recent forecast I made on each question, and offers me a customizable way to make it recur.

In the figure above, my last Adelgid forecast used Power Mode, so it offers me the option to make “>78%” a recurring edit, for however many points I want. My last Graph500 forecast used Safe Mode, so it offers me the option of a recurring Safe Mode forecast, for a specifiable #days (currently 1-7 only).

Recurring forecasts are tracked in your forecast history and marked as such so you can keep track of what was the original forecast and what was recurring. Use “My Dashboard -> My Activities -> My Recurring” to see and cancel them.

Please let us know how you like these new features. We’re continuing to work on a better commenting system and even more powerful and efficient forecasting tools, and this release is an important first step down that path.

The following shows an example of a Scaled or Continuous question:

Instead of estimating the chance of a particular outcome, you are asked to forecast the outcome in natural units such as dollars. Forecasts that move the estimate toward the actual outcome will be rewarded; those that move it away will be penalized. As with probability questions, moving toward the extremes is progressively more expensive: we have merely rescaled the usual 0%-100% range and customized the interface.
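Assuming the rescaling is linear between a question's lower and upper bounds, the mapping between natural units and the internal 0-1 scale might look like this. The $0 and $200 bounds are hypothetical, and clipping out-of-range values to the nearest bound is our assumption.

```python
def to_internal(value, lo, hi):
    """Map a forecast in natural units onto the internal 0-1 scale.

    ASSUMPTION: the rescaling is linear between the question's bounds;
    values outside [lo, hi] are clipped to the nearest bound.
    """
    if not lo < hi:
        raise ValueError("lower bound must be below upper bound")
    clipped = min(max(value, lo), hi)
    return (clipped - lo) / (hi - lo)


def to_natural(p, lo, hi):
    """Inverse mapping: internal 0-1 position back to natural units."""
    return lo + p * (hi - lo)


# HYPOTHETICAL question bounded between $0 and $200.
print(to_internal(150, 0, 200))  # 0.75
print(to_natural(0.75, 0, 200))  # 150.0
```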

Forecasters frequently want to know why their forecast had so much (or so little) effect. For example, Topic Leader jessiet recently asked:

I made a prediction just now of 10% and the new probability came down to 10%. That seems weird- that my one vote would count more than all past predictions? I assume it’s not related to the fact that I was the question author?

The quick answer is that she used Power mode, which is our market interface, and that’s how markets work: your estimate becomes the new consensus. Sound crazy? Note that markets beat out most other methods for the past three years of live geopolitical forecasting on the IARPA ACE competition. For two years, we ran one of those markets, before we switched to Science & Technology. So how can this possibly work? Read on for (a) How it works, (b) Why you should start with Safe mode, (c) The scoring rule underneath, and (d) An actual example.
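As a hedged illustration of point (c), here is a logarithmic market scoring rule (LMSR), a standard rule for this kind of market and the one we assume for this sketch. Whoever edits last moves the consensus from `p_old` to `p_new` and is scored on that change once the question resolves. The liquidity parameter `b = 100` and the prior consensus of 30% are hypothetical.

```python
import math


def log_market_score(p_old, p_new, outcome_happened, b=100.0):
    """Points won (positive) or lost (negative) for moving a binary question's
    consensus from p_old to p_new, under a logarithmic market scoring rule.

    ASSUMPTION: LMSR-style scoring; b is a HYPOTHETICAL liquidity parameter.
    """
    if not (0 < p_old < 1 and 0 < p_new < 1):
        raise ValueError("probabilities must be strictly between 0 and 1")
    if outcome_happened:
        return b * math.log(p_new / p_old)
    return b * math.log((1 - p_new) / (1 - p_old))


# HYPOTHETICAL: the consensus was 30% before an edit down to 10%.
print(round(log_market_score(0.30, 0.10, outcome_happened=False), 1))  # 25.1
print(round(log_market_score(0.30, 0.10, outcome_happened=True), 1))   # -109.9
```

Under these assumptions, moving a question from 30% to 10% earns about 25 points if the event does not happen but loses about 110 if it does, so a big jump in Power mode is a bold bet rather than a free vote.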

By Dr. Ken Olson

Have you explored Related Forecasts yet? The SciCast Team frequently adds new questions and new links between questions. The links create clusters of related questions supporting “what-if” forecasts. We will be showcasing some of those here on the blog.

For example, below we see part of the network linking three clusters of questions: Arctic sea ice, the GBO-4 biodiversity reports, and the Pacific sardine population.

An arc (an arrow leading from one question to another) indicates that we think the outcome of one question might influence the other. Questions connected by arcs will usually appear in each other’s “Related Forecasts” section. For example, “GBO-4 Biomes” will appear for “Arctic sea ice extent” and vice versa. Your biome forecast can depend on sea ice extent: presumably a loss of sea ice reduces that biome.
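An arc means a forecast on the child question can be stated conditionally on the parent. A minimal sketch using the law of total probability, with invented numbers rather than actual SciCast forecasts, shows how a "what-if" on sea ice changes the biome forecast.

```python
def total_probability(p_parent, p_child_if_parent, p_child_if_not_parent):
    """Law of total probability for one parent -> child arc."""
    return (p_parent * p_child_if_parent
            + (1 - p_parent) * p_child_if_not_parent)


# HYPOTHETICAL numbers, not actual SciCast forecasts.
p_ice_loss = 0.6          # P(large sea ice loss)
p_decline_if_loss = 0.8   # P(biome declines | large sea ice loss)
p_decline_if_not = 0.3    # P(biome declines | no large sea ice loss)

print(round(total_probability(p_ice_loss, p_decline_if_loss, p_decline_if_not), 2))  # 0.6
# "What-if": assume the sea ice question resolves Yes.
print(total_probability(1.0, p_decline_if_loss, p_decline_if_not))  # 0.8
```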