SciCasters:

Thank you for your participation over the past year and a half in the largest collaborative S&T forecasting project ever. Our main IARPA funding has ended, and we were not able to finalize arrangements with our (likely) new sponsor in time to keep question management, user support, engineering support, and prizes running uninterrupted. We will therefore suspend SciCast Predict for the summer, starting June 12, 2015 at 4 pm ET. We expect to resume in the fall with the enthusiastic support of a big S&T sponsor. In the meantime, we will continue to update the blog and provide links to leaderboard snapshots and important data.

Recap

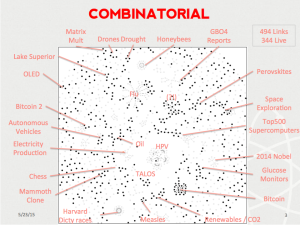

Through the course of this project, we’ve seen nearly 130,000 forecasts from thousands of forecasters on over 1,200 forecasting questions, an average of more than 240 forecasts per day. We created a combinatorial engine robust enough to allow crowdsourced linking, resulting in the following rich domain structure:

Near-final question structure on SciCast, with most of the live links provided by users.

Some project highlights:

- The market beat its own unweighted opinion pool (from Safe Mode) 7 times out of 10, by an average of 18% (measured by mean daily Brier score per question)

- The overall market Brier score was about 0.29

- The project was featured in The Wall Street Journal, Nature, and many other outlets

- SciCast partnered with AAAS, IEEE, and the FUSE program to author more than 1,200 questions

- Project principals Charles Twardy and Robin Hanson answered questions in a Reddit Science AMA

- SciCasters weighed in on news movers & shakers like the Philae landing and Flight MH370

- SciCast held partner webinars with ACS and with TechCast Global

- SciCast hosted questions (and provided commentary) for the Dicty World Race

- In collaboration with the Discovery Analytics Center at Virginia Tech and Healthmap.org, SciCast featured questions about the 2014-2015 flu season

- SciCast gave away BIG prizes for accuracy and combo edits

- Other researchers are using SciCast for analysis and research in the Bitcoin block size debate

- MIT and ANU researchers studied SciCast accuracy and efficiency and were unable to improve on it using stock machine learning, a testimony to our most active forecasters and their bots (see Della Penna, Adjodah, and Pentland 2015)
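Two of the highlights above compare forecasts by Brier score. As an illustration only, here is a minimal Python sketch of how a mean daily Brier score, and the relative improvement of one forecast source over another, could be computed. It assumes the multi-outcome Brier convention (sum of squared errors across all outcomes, from 0 for a perfect forecast to 2 for a confident, completely wrong one) and scores the forecast standing at the end of each day; the function names and the three-day forecast histories are hypothetical, not SciCast data or SciCast's own scoring code.

```python
from typing import Sequence


def brier(probs: Sequence[float], outcome: int) -> float:
    """Multi-outcome Brier score (assumed convention): sum of squared errors across outcomes.

    0.0 is a perfect forecast; 2.0 is a confident, completely wrong one.
    """
    return sum((p - (1.0 if i == outcome else 0.0)) ** 2 for i, p in enumerate(probs))


def mean_daily_brier(daily_probs: Sequence[Sequence[float]], outcome: int) -> float:
    """Average the Brier score of each day's standing forecast over the question's life."""
    scores = [brier(day, outcome) for day in daily_probs]
    return sum(scores) / len(scores)


def relative_improvement(candidate: float, baseline: float) -> float:
    """Fractional Brier reduction of candidate vs. baseline (positive = candidate is better)."""
    return (baseline - candidate) / baseline


# Hypothetical three-day histories for a two-outcome question that resolved to outcome 0.
market = [[0.6, 0.4], [0.7, 0.3], [0.8, 0.2]]
pool = [[0.5, 0.5], [0.6, 0.4], [0.6, 0.4]]

market_score = mean_daily_brier(market, outcome=0)
pool_score = mean_daily_brier(pool, outcome=0)
print(f"market {market_score:.3f}, pool {pool_score:.3f}, "
      f"improvement {relative_improvement(market_score, pool_score):.0%}")
```

Because every day counts equally in this average, a source that converges on the right answer sooner earns a lower (better) mean daily score, not just one that is right at resolution.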

What’s Next?

Prizes for the combo edits contest will be sent out this week, and we will be sharing a blog post summarizing the project. Although SciCast.org will be closed, this blog and the user group will remain open. Watch for announcements about the future of SciCast.

Once again, thank you so much for your participation! We’re nothing without our crowd.

Contact

Please contact us at [email protected] if you have questions about the research project or want to talk about using SciCast in your own organization.

So Long, and Thanks for All the Fish
