The SciCast 2015 Annual Report has been approved for public release. The report focuses on Y4 activities, but also includes a complete publication and presentation list for all four years. Please click “Download SciCast Final Report” to get the PDF. You may also be interested in the SciCast anonymized dataset.

Here are two paragraphs from the Executive Summary:

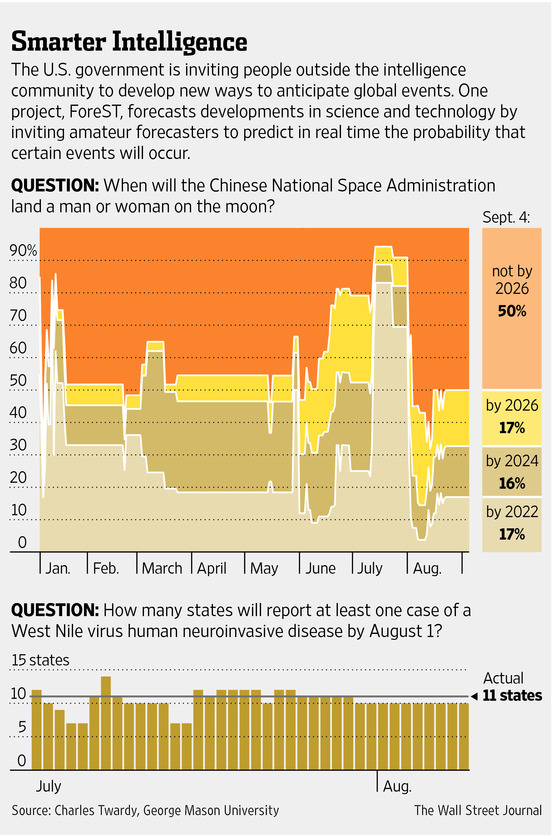

We report on the fourth and final year of a large project at George Mason University developing and testing combinatorial prediction markets for aggregating expertise. For the first two years, we developed and ran the DAGGRE project on geopolitical forecasting. On May 26, 2013, we renamed ourselves SciCast, engaged Inkling Markets to redesign our website front-end and handle both outreach and question management, re-engineered the system architecture and refactored key methods to scale up by 10x – 100x, engaged Tuuyi to develop a recommender service to guide people through the large number of questions, and pursued several engineering and algorithm improvements including smaller and faster asset data structures, backup approximate inference, and an arc-pricing model and dynamic junction-tree recompilation that allowed users to create their own arcs. Inkling built a crowdsourced question writing platform called Spark. The SciCast public site (scicast.org) launched on November 30, 2013, and began substantial recruiting in early January 2014.

As of May 22, 2015, SciCast has published 1,275 valid questions and created 494 links among 655 questions. Of these, 624 questions are open now, of which 344 are linked (see Figure 1). SciCast has an average Brier score of 0.267 overall (0.240 on binary questions), beating the uniform distribution 85% of the time, by about 48%. It is also 18-23% more accurate than the available baseline: an unweighted average of its own “Safe Mode” estimates, even though those estimates are informed by the market. It beats that unweighted average (ULinOP) about 7 times out of 10.
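For readers who want to see how comparisons like these work, here is a minimal sketch of scoring a forecast against a uniform baseline and an unweighted average. It is not the SciCast scoring code. It assumes the multi-outcome form of the Brier score (squared error summed over all outcomes, so a binary coin-flip forecast scores 0.5), and the question, the probabilities, and the function names are all made up for illustration.

```python
from typing import List, Sequence


def brier_score(forecast: Sequence[float], outcome_index: int) -> float:
    """Multi-outcome Brier score (assumed convention): squared error summed over outcomes."""
    return sum(
        (p - (1.0 if i == outcome_index else 0.0)) ** 2
        for i, p in enumerate(forecast)
    )


def uniform_forecast(n_outcomes: int) -> List[float]:
    """Baseline that spreads probability evenly across all outcomes."""
    return [1.0 / n_outcomes] * n_outcomes


def unweighted_average(forecasts: Sequence[Sequence[float]]) -> List[float]:
    """Plain average of several forecasts, outcome by outcome."""
    n = len(forecasts)
    return [sum(column) / n for column in zip(*forecasts)]


# Hypothetical binary question where outcome 0 ("yes") turned out to be true.
market = [0.8, 0.2]                                # made-up market estimate
individual = [[0.9, 0.1], [0.6, 0.4], [0.3, 0.7]]  # made-up individual estimates
pool = unweighted_average(individual)

market_score = brier_score(market, 0)
uniform_score = brier_score(uniform_forecast(2), 0)
pool_score = brier_score(pool, 0)

# Relative improvement over the uniform baseline (lower Brier is better).
improvement = 1 - market_score / uniform_score

print(f"market={market_score:.3f} uniform={uniform_score:.3f} pool={pool_score:.3f}")
print(f"improvement over uniform: {improvement:.0%}")
```

Lower scores are better, so a forecast "beats" a baseline on a question when its Brier score is smaller. The report's figures are averages over many questions, and the report itself is the place to check exactly how they were computed.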

You are welcome to cite this annual report. Please also cite our Collective Intelligence 2014 paper and/or our International Journal of Forecasting 2015 paper (currently under review, so cite it only if it gets published).

Sincerely,

Charles Twardy and the SciCast team
