SciCast has been featured in a Wall Street Journal article about crowdsourced forecasting in the U.S. intelligence community. We’re excited to share that SciCast now has nearly 10,000 participants. That is a 50% increase over the last two months and an important milestone for a crowdsourced prediction site.

ForeST, led by George Mason University’s SciCast team, broke off from ACE in 2013 to focus specifically on developments in science and technology, and uses similar techniques. Tracking developments in these fields may help identify advances in weapons systems or emerging technologies in bioterrorism or cyberthreats.

“SciCast lets us measure the quality of our predictions and improve them,” said Charles Twardy, who leads the project.

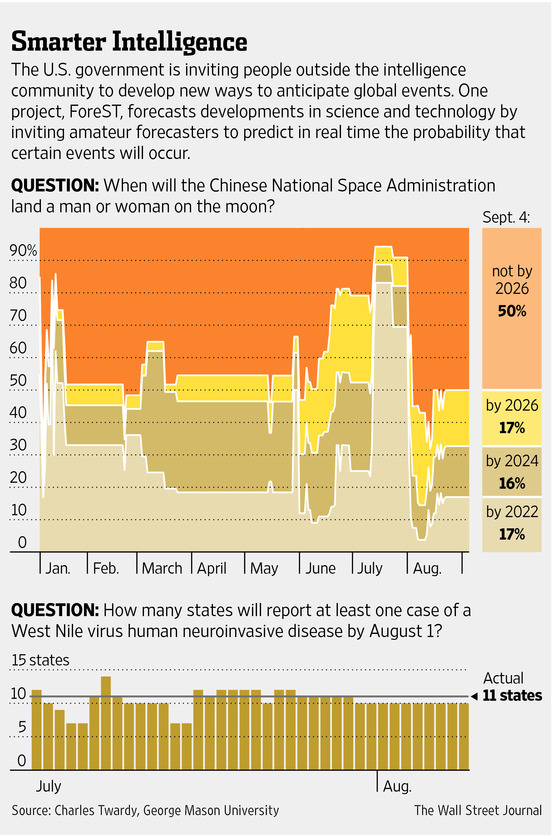

Among SciCast’s predictions this year was that between 10 and 12 states would report cases of West Nile virus by Aug. 1. Eleven did. And an open question seeks to predict when China will complete its first moonwalk.

Read the full article here: “U.S. Intelligence Community Explores More Rigorous Ways to Forecast Events.”

“We’re excited to share that SciCast now has nearly 10,000 participants.” Seriously? Doesn’t it feel just a little silly to pretend to be “excited” about a complete fiction that bears no relation to any meaningful reality? Aren’t you really saying, “We’re excited to share that we paid an Internet marketing company tons of money, money we could have spent building a useful GUI, to create banner ads that brought in thousands of one-time visitors who made a forecast on one or maybe even two questions! And they didn’t even screw things up very much! As @ctwardy and @kenny have explained elsewhere, they barely had any effect, and they gave our real forecasters tons of easy liquidity on the few unimportant questions they were funneled into!”

Seriously? Perhaps the claim of a 50% increase is legitimate, but it is more like an increase from 20 to 30 real users, or maybe 30 to 45. I understand that you need the facade of thousands of participants for marketing purposes, and that some potential sponsors and customers may actually give it a wee bit of credence. But stating it on a blog for actual users? With faux “excitement”? I feel sorry for the author; I hope that SciCast pays her well.

Also, I see that the numbers in the graphic legend used in the WSJ article are completely messed up. It says “16% by 2024” and “17% by 2026,” when it should say “33% by 2024” and “50% by 2026.” The graphic shows the per-bucket (incremental) numbers from the actual question, not running totals. But nobody in the WSJ article’s comments noticed or cared.
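To make the incremental-versus-cumulative point concrete, here is a minimal Python sketch that turns per-bucket probabilities into running totals with `itertools.accumulate`. The 16% and 17% figures come from the WSJ sidebar. The first bucket’s 17% is not stated anywhere in the post; it is only inferred from the commenter’s 33% total (33 - 16), and the “by 2022” label is likewise an assumption.

```python
from itertools import accumulate

# Per-bucket (incremental) probabilities, in percent.
# Assumption: the first bucket ("by 2022") is inferred as 33 - 16 = 17;
# the post does not state it directly.
buckets = ["by 2022", "by 2024", "by 2026"]
incremental = [17, 16, 17]

# Running totals: the probability that the event happens by each date.
cumulative = list(accumulate(incremental))

for label, inc, total in zip(buckets, incremental, cumulative):
    print(f"{label}: +{inc}% this bucket, {total}% total")
```

Under that assumption, the running totals are 17%, 33%, and 50%, which matches the commenter’s corrected “33% by 2024” and “50% by 2026.”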

Press coverage is good. It is much more promising than any ad campaign as a means of attracting people who might be genuinely interested and actually stick around.

Press coverage is good, and we are very glad for our coverage in the WSJ, KurzweilAI, and other outlets over the last 8 months. Such coverage does have a better chance of finding active forecasters, but it’s occasional, and we are always looking for more participants to try the site. Crowd activity follows a power law. Even among massively popular support forums studied by my colleague Aditya Johri, most people only ever ask one question, and most of the replies come from a dedicated handful. So we may need to attract 1,000 people for every @Relax or @dvasya. Most will probably try the site a few times and then walk away, most of the rest will only make the occasional edit, and so on.

So of course 10K isn’t the number of active users, but yes, because I have 10 fingers, I find 10,000 unreasonably more exciting than 9,834. Also, beforehand I didn’t think banner ads would have any substantial effect, so it’s huge to know that we can get new people to try the site. Having 10K registered means there are now potentially ~1K people who will make an occasional forecast if we treat them right.
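The rough ratios in the last two comments can be written out as a back-of-the-envelope funnel. This is a minimal sketch that assumes the post’s own estimates (about 1 in 10 registrants forecasting occasionally, and about 1,000 sign-ups per dedicated forecaster); the function name `funnel` and the constants are illustrative, not measured values or anything SciCast computes.

```python
# Back-of-the-envelope participation funnel.
# Assumption: these ratios are the rough estimates from the comments above,
# not measurements.
REGISTERED = 10_000            # "nearly 10,000 participants"
OCCASIONAL_SHARE = 0.10        # "~1K people who will make an occasional forecast"
SIGNUPS_PER_DEDICATED = 1_000  # "1,000 people for every @Relax or @dvasya"


def funnel(registered: int) -> dict[str, int]:
    """Return rough head counts at each stage of the funnel (hypothetical helper)."""
    return {
        "registered": registered,
        "occasional": round(registered * OCCASIONAL_SHARE),
        "dedicated": registered // SIGNUPS_PER_DEDICATED,
    }


print(funnel(REGISTERED))
# {'registered': 10000, 'occasional': 1000, 'dedicated': 10}
```

Under those assumed ratios, 10K registrations would correspond to roughly a thousand occasional forecasters and on the order of ten dedicated ones.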

Training is an obvious gap — we’re working on new tutorials. Incentives matter — we are analyzing the previous incentives to better design a new campaign. Feedback matters — we just provided question-level estimates of gain/loss and we are working on much more. And community matters — we can provide threaded comments and recent-activity widgets, but ultimately that comes down to how all of you interact.

PS: We saw 302 unique forecasters in August (when ads were paused) — I’d like that to be ~1K, at least double the number of active questions.

PPS: The WSJ graphic is pretty clearly showing incrementals. Don’t forget your Grice!

I think you should look into @jkominek’s earlier suggestion to consider StackExchange models, if you haven’t already. While the StackExchange forums I’m familiar with (askubuntu, mathoverflow, math.stackexchange, …) do exhibit the “most answers come from a few people” effect, I think it’s less severe than on traditional support forums. The most important features are probably (1) a reputation system tied to the quality of questions and answers as determined by votes, with those votes also affecting visibility, and (2) gamification (a fancy badge system). These two interact well. SciCast has (2) but not (1). Reddit has (1) but not really (2). I think the latter shows that (1) is more important than (2), although (2) is fun. In SciCast’s case, reputation would have to be introduced as an *additional* “currency” on top of actual points, which might confuse new users, so that’s a bit of an issue. On the other hand, a weighted combination of reputation and user score could be a reasonable proxy for “trust.” This trust could then be used to vote to pause a question (for resolution or for rewriting), as discussed in other threads.
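One way to picture the “trust” proposal is as a weighted sum that gates a pause vote. The Python sketch below is purely hypothetical: the `User` fields, the 0.5 weight, the 1.5 threshold, and the rule of summing voters’ trust are all assumptions for illustration, not SciCast features or settings.

```python
from dataclasses import dataclass


@dataclass
class User:
    name: str
    reputation: float  # hypothetical 0..1 vote-based reputation (not a SciCast field)
    score: float       # hypothetical 0..1 normalized forecasting score


REPUTATION_WEIGHT = 0.5  # hypothetical weight on reputation vs. score
PAUSE_THRESHOLD = 1.5    # hypothetical total trust needed to pause a question


def trust(user: User, w: float = REPUTATION_WEIGHT) -> float:
    """Weighted combination of reputation and score, as the comment suggests."""
    return w * user.reputation + (1 - w) * user.score


def should_pause(voters: list[User], threshold: float = PAUSE_THRESHOLD) -> bool:
    """Pause once the summed trust of users voting to pause reaches the threshold."""
    return sum(trust(u) for u in voters) >= threshold
```

With these made-up numbers, one fully trusted user (trust 1.0) cannot pause a question alone, but adding a second user with trust 0.5 reaches the threshold. Summing trust, rather than counting votes, is one design choice among several; a weighted median or a minimum voter count would be reasonable alternatives.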

As much as I’d like to take credit, I think it was Charles himself who already liked StackExchange. (And while I like it too for what it does, I’m not really sure support is the issue here.)

@sflicht: Before launch we had actually worked out a whole ‘karma’ system for non-trading participation, but scrapped it because of complexity.

What about simply increasing the salience and impact of existing comment upvotes, with extra badges and perks?

-ctwardy

I could see it encouraging engagement.

Also, I *like* the stacked line chart the WSJ used, and I think it probably makes more sense as the default graphic for showing trading histories.

FWIW, I also *like* the stacked line chart from the WSJ. I don’t understand why @ctwardy would suggest that Grice would object to helpfully pointing out that the side captions were flawed and potentially confusing in an otherwise nice representation of the data. (Granted, the irritated rudeness in the previous comment obscured any validity in that criticism.)

@relax: Regarding Grice, I’d contend that the context including constrained space makes the sidebar clearly incremental, in the way that we might say, “12 people finished by noon, 5 by 1pm, and 2 more by 3pm.” On the other hand, if it was confusing to you, then my contention is false or at least not universal.

Certainly “Between 2022 and 2024” might be more precise, but it wouldn’t fit in their nice sidebar. We’re open to suggestions that balance elegance and precision; we do want to redo the trend graphs.

The graphic is fine; just include the correct numbers: “33% by 2024” and “50% by 2026.” Once you abandoned the wording (and meaning) of the actual SciCast “choices,” the original numbers (16% and 17%) no longer told the same story as the graphic. You should have just abandoned them and used the more-appropriate running totals. Elegance and precision are not in opposition here.

Pingback: Jonathan Gruber and the Wisdom of Crowds