Reposted with permission from SciCast forecaster Jay Kominek. You can find his blog, hypercomplex.net, here.

I’m going to assume you’re familiar with SciCast; if you aren’t, that link is the place to start. Or maybe Wikipedia.

There has been an open question on SciCast, “Will Bluefin-21 locate the black box from Malaysian Airlines flight MH-370?”, since mid-April. (If you don’t know what MH370 is, I envy you.) The forecast dropped fairly quickly to a 10% chance of Bluefin-21 locating MH370. Early on, that was reasonable enough. There was evidence that pings from the black box had been detected, which narrowed the search from the entire Indian Ocean down to a relatively small area.

Unfortunately, weeks passed, and on May 29th Bluefin-21 completed its mission, unsuccessfully, and stopped looking. At this point, I (and others) expected the forecast to plummet. But folks kept pushing it back up. In fact, I count 5 or 6 distinct individuals who moved the probability up after the mission was completed. There are perfectly good reasons, related to the nature of the prediction market, for some of those adjustments.

I’m interested in the bad reasons.

One of the bad reasons that could apply to any question, but seems to be a very likely candidate for this question, is being unaware of the state of the world. Forecasting the future when you don’t know the present just isn’t going to turn out very well. In the best case, you’ve got really good priors, and you’re stuck working off of those. Realistically, your priors aren’t that great. Evidence could help them out, but you’re not updating.

In the specific case of the Bluefin-21, it was suggested (by someone with whom I have no beef, and am not trying to single out!) that there was still a chance MH370 would be discovered by going back and reanalyzing the data.

This didn’t seem at all likely to me. I attempted to explain why, though in retrospect I think my explanation was poor and incomplete.

To formalize my reasoning, I hunted around for a software tool that would let me crunch the numbers and make a diagram. I turned up GeNIe, which looked appropriate for the task. GeNIe is a GUI on top of SMILE, which is a software library for (hand wave) modeling Bayesian networks.

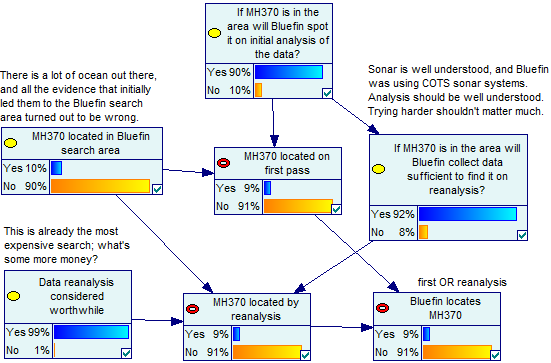

I ended up producing this model and looks like this:

(If you want to fiddle with the model, you can download it here.)

This model assumes Bluefin-21 is 90% effective on the first pass, and that reanalysis has a 20% chance to find MH370 if the first pass missed it. It also assumes that reanalysis will almost certainly happen, but that there is only a 10% chance that MH370 is even in the search area. I feel that’s generous given the size of the search area and the nature of the acoustic evidence. (I never thought the surface debris was likely to be related. There’s a lot of crap in the ocean. That they found so little is just evidence of the size of the ocean.)
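As a sanity check on those numbers, here is a minimal sketch of the same network evaluated by brute-force enumeration in Python rather than in GeNIe. The chain structure (in the area, then first pass, then reanalysis, then found) is my reading of the description above, the 0.99 used for "almost certainly" reanalyzed is an assumed stand-in, and the names are hypothetical.

```python
from itertools import product

P_IN_AREA = 0.10           # MH370 is in the search area at all
P_FIRST_PASS = 0.90        # first pass spots it, given it is there
P_REANALYSIS = 0.99        # reanalysis happens (assumed value for "almost certainly")
P_REANALYSIS_FINDS = 0.20  # reanalysis finds it, given it is there and was missed


def joint(in_area: bool, first: bool, reanalyzed: bool, second: bool) -> float:
    """Probability of one complete assignment of the four binary variables."""
    p = P_IN_AREA if in_area else 1 - P_IN_AREA
    # The first pass can only succeed if the wreck is actually in the area.
    p_first = P_FIRST_PASS if in_area else 0.0
    p *= p_first if first else 1 - p_first
    p *= P_REANALYSIS if reanalyzed else 1 - P_REANALYSIS
    # Reanalysis can only succeed if the wreck is there, was missed, and reanalysis ran.
    p_second = P_REANALYSIS_FINDS if (in_area and not first and reanalyzed) else 0.0
    p *= p_second if second else 1 - p_second
    return p


def p_found() -> float:
    """P(MH370 is found on the first pass or by reanalysis)."""
    return sum(
        joint(in_area, first, reanalyzed, second)
        for in_area, first, reanalyzed, second in product([True, False], repeat=4)
        if first or second
    )


print(f"P(found) = {p_found():.4f}")
```

With these inputs the enumeration reduces to 0.1 × (0.9 + 0.1 × 0.99 × 0.2) ≈ 0.092, in line with the roughly 9% the GeNIe model reports.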

Based on all of that, the model says there’s a 9% chance Bluefin-21 would find MH370. That is about where the SciCast prediction was. The other forecasters might’ve been giving the pings more credit. Anyway, we’re in the ballpark. So let’s now tell the model that Bluefin-21 didn’t find MH370 on the first pass, and see what it says:

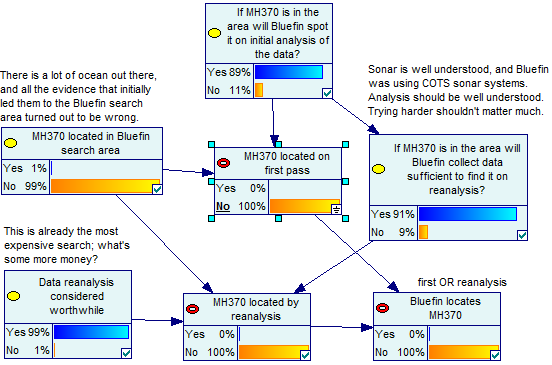

Weee, the chance that Bluefin-21 will find anything has now dropped to basically zero. That’s because not finding MH370 on the first pass is evidence that it isn’t there, even if Bluefin-21 is imperfect. In fact, the better Bluefin-21 is, the stronger the evidence of absence. For any real chance of detection to remain, Bluefin-21 would have to be good at collecting data, but the analysis would have to be awful and error-prone unless you repeated it. I didn’t attempt to model that, because it would be such a weird situation.

First, sonar is well understood, and Bluefin-21 was using COTS units that basically produce an elevation model of the ocean floor. Second, if “trying harder” upped the odds of detection, then the operators would throw more CPU or manpower at it until they’d raised the likelihood of detection as high as it was reasonably going to go. The cost of the search had hit at least $44 million before Bluefin-21 got wet; some additional data analysis isn’t going to put a dent in that. (Maybe you could find a signal processing researcher to apply some cutting-edge techniques, but if that didn’t happen at the start of the search, I don’t think it’ll happen before the question expires.)
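The update after the failed first pass, and the "trying harder" argument, can be checked with a few lines of Bayes’ rule. This is a sketch, not GeNIe’s output: it uses the same parameters, assumes reanalysis happens with probability 0.99 (a stand-in for "almost certainly"), and, in the last loop only, adds a hypothetical assumption that the original model does not make, namely that each extra reanalysis pass independently catches a missed wreck 20% of the time.

```python
P_IN_AREA = 0.10           # prior: MH370 is in the search area
P_REANALYSIS = 0.99        # assumed stand-in for "almost certainly happens"
P_REANALYSIS_FINDS = 0.20  # reanalysis finds a wreck the first pass missed


def p_in_area_given_miss(p_first_pass: float, p_in_area: float = P_IN_AREA) -> float:
    """Bayes' rule: P(in area | first pass found nothing)."""
    miss_and_there = p_in_area * (1 - p_first_pass)  # it was there, but missed
    miss_and_absent = 1 - p_in_area                  # it was never there
    return miss_and_there / (miss_and_there + miss_and_absent)


def p_found_given_miss(p_first_pass: float = 0.90) -> float:
    """P(a single reanalysis finds MH370 | first pass found nothing)."""
    return p_in_area_given_miss(p_first_pass) * P_REANALYSIS * P_REANALYSIS_FINDS


def p_found_after_passes(k: int, p_first_pass: float = 0.90) -> float:
    """Hypothetical: P(any of k independent reanalysis passes succeeds | miss)."""
    return p_in_area_given_miss(p_first_pass) * (1 - (1 - P_REANALYSIS_FINDS) ** k)


print(f"P(in area | miss) = {p_in_area_given_miss(0.90):.4f}")
print(f"P(found | miss)   = {p_found_given_miss():.4f}")

# A better first pass makes a miss stronger evidence of absence.
for eff in (0.5, 0.7, 0.9, 0.99):
    print(f"first pass {eff:.2f}: P(in area | miss) = {p_in_area_given_miss(eff):.4f}")

# More effort saturates: no number of passes can exceed P(in area | miss).
for k in (1, 5, 20):
    print(f"{k:>2} passes: P(found | miss) = {p_found_after_passes(k):.4f}")
```

With these numbers a miss leaves about a 1.1% chance that MH370 is in the area at all and about a 0.2% chance that a reanalysis finds it; that 1.1% is also a ceiling that no amount of extra analysis can beat.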

This question is pretty much over, but if you have suggestions for making the model better, I’d be interested in hearing them. This is the first thing I’ve done with this sort of modeling tool, so I’m sure some things could be done better.

I plan to make further use of GeNIe for my SciCasting. For starters, the models make it easier to break apart the separate components of my belief. I can also tweak nodes and have the machine handle propagating the change, which will be much more accurate than fuzzily doing it in my head.

Using some of the fancier(?) features should also allow me to focus my forecasting efforts and points on the questions where I can get the best returns. (Basically by answering the obvious question of “Am I sure enough to make it worth it?”)
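One way to phrase "Am I sure enough to make it worth it?" is as an expected score. The sketch below assumes a logarithmic market scoring rule (my understanding of how SciCast’s market maker paid out; treat that as an assumption, and note that any point-scaling factor is left out). Under that rule, moving a question from market probability `market` to `new` pays ln(new/market) if the event happens and ln((1 - new)/(1 - market)) if it doesn’t; weighting those by your own belief gives the expected gain. The function name is hypothetical.

```python
import math


def expected_gain(belief: float, market: float, new: float) -> float:
    """Expected log-score change (nats, unscaled) from moving the market
    from `market` to `new`, judged by your own probability `belief`."""
    gain_if_yes = math.log(new / market)
    gain_if_no = math.log((1 - new) / (1 - market))
    return belief * gain_if_yes + (1 - belief) * gain_if_no


# A large edge: the market sits at 10% while you believe roughly 0.2%.
print(f"{expected_gain(0.002, 0.10, 0.002):.4f}")
# Barely any edge: the market sits at 10% while you believe 9%.
print(f"{expected_gain(0.09, 0.10, 0.09):.4f}")
```

Under this rule the expected gain is largest when you move the market all the way to your own belief, and it shrinks toward zero as your belief approaches the market price, which is exactly when a trade stops being worth the points at risk.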

Finally, I’d like to put my models up like this, along with some explanation. That way I can demonstrate that I’m predicting the future on the basis of cunning and wit, not piles of luck. ☺
