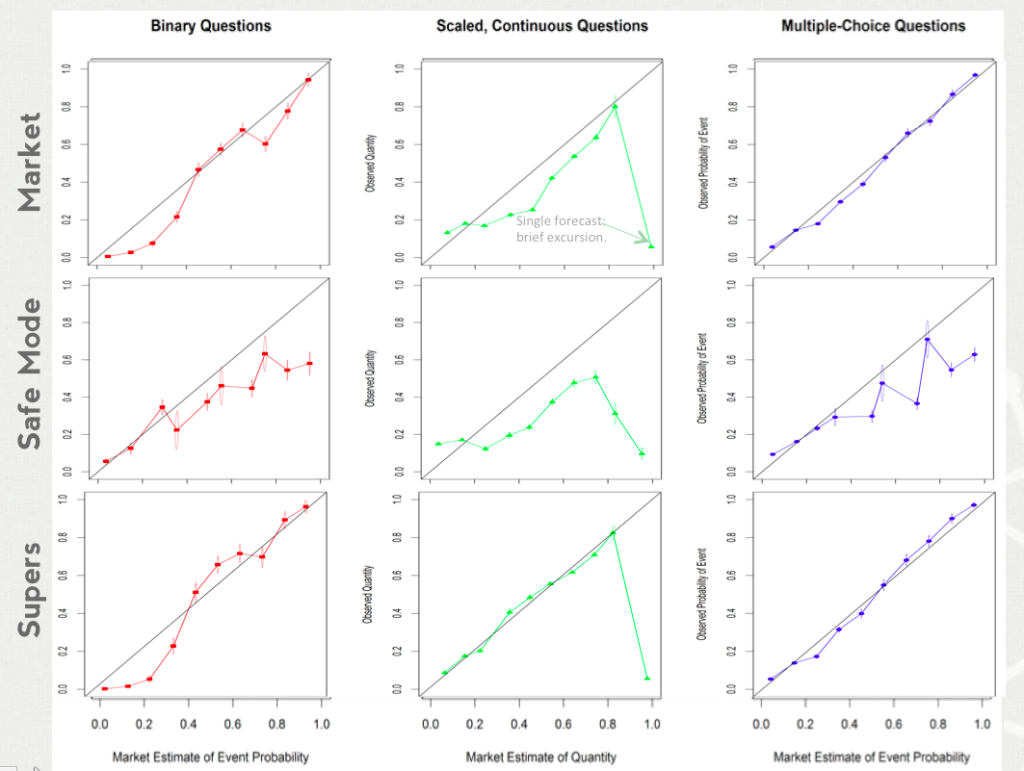

The market is better calibrated than we thought, but not perfect. In our previous calibration post, each question counted once. In the chart below, each forecast counts once, which is the usual method.
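The sketch below shows how the two weightings differ. It is a binned calibration curve and assumes forecasts arrive as hypothetical `(question_id, probability, outcome)` tuples with ten equal-width bins. The post does not say how the chart was actually binned.

```python
from collections import defaultdict

def calibration(forecasts, n_bins=10, per_question=False):
    """Return {bin_index: (mean forecast, observed frequency)}.

    forecasts: list of (question_id, probability, outcome) with outcome 0 or 1.
    per_question=False counts every forecast once; per_question=True gives each
    question a total weight of 1, split evenly across its forecasts.
    """
    counts = defaultdict(int)
    for qid, _, _ in forecasts:
        counts[qid] += 1
    # Per bin: [total weight, weighted sum of forecasts, weighted sum of outcomes]
    sums = defaultdict(lambda: [0.0, 0.0, 0.0])
    for qid, p, outcome in forecasts:
        w = 1.0 / counts[qid] if per_question else 1.0
        b = min(int(p * n_bins), n_bins - 1)  # equal-width bin; p == 1.0 goes in the top bin
        s = sums[b]
        s[0] += w
        s[1] += w * p
        s[2] += w * outcome
    return {b: (s[1] / s[0], s[2] / s[0]) for b, s in sorted(sums.items())}
```

Suppose three forecasts near 0.75 fall on one question that resolved Yes and one falls on a question that resolved No. The 0.7 to 0.8 bin then shows an observed frequency of 0.75 when each forecast counts once, but 0.5 when each question counts once. A handful of heavily traded questions can therefore reshape a bin.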

The main message is that the overall market is much better calibrated than the raw estimates (Safe Mode). Comparing the top and bottom rows shows that the market is strongly driven by the super-forecasters (here the Top 70 by Brier score, though the picture doesn’t change much if we use market score or the Top 10).

On Scaled questions, both the raw estimates and the market as a whole overestimate. The supers, however, do exceptionally well. (The anomaly noted in the Scaled markets comes from a single forecaster who submitted a forecast at the right extreme and then quickly corrected it. However, forecasters in Safe Mode were much more likely to assert extreme values, as the small error bars in the middle plot show.)

The market shows perhaps a small favorite-longshot bias for multiple-choice questions and a strong longshot bias for binary questions. The dip around forecasts of 0.75 on binary questions, which ruins the supers’ beautiful S-shape, is caused by a high number of forecasts near 0.75 on two questions: “Will Google announce development of a smartwatch at or before the Google I/O 2014 Conference?” and “Will Google acquire Twitch by the end of September 2014?” We suspect that the better calibration on multiple-choice questions comes from counting forecasts on all options even though the user directly sets only one; the others are normalized to accommodate it.
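The sketch below shows how one edit produces several forecasts. It assumes the other options are rescaled proportionally when a user sets a single option. That is what happens under a logarithmic market scoring rule when you trade on one outcome only, but the post does not confirm this is SciCast's exact rule. `set_option` is a hypothetical helper.

```python
def set_option(probs, k, new_p):
    """Set option k to new_p and rescale every other option by the same factor
    so the distribution still sums to 1 (assumed proportional normalization)."""
    if not 0.0 < new_p < 1.0:
        raise ValueError("new_p must be strictly between 0 and 1")
    rest = 1.0 - probs[k]  # probability mass currently on the other options
    if rest <= 0.0:
        raise ValueError("other options have no mass to rescale")
    scale = (1.0 - new_p) / rest
    return [new_p if i == k else p * scale for i, p in enumerate(probs)]

print(set_option([0.5, 0.3, 0.2], 0, 0.6))  # other options shrink to about 0.24 and 0.16
```

The user chose only the 0.6. Counting every option as a forecast still adds three points to the calibration chart, and two of them inherit their values from the normalization.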

It’s a bit of a shame to have the methodology reflect “mistake” edits like the one in the middle column. I’m sort of curious what happens when you bucket time into (say) 30-minute intervals and compute the calibration of the “pseudo-forecasts” formed by comparing the market snapshots at the beginning and end of each interval.
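Here is a minimal sketch of the suggested bucketing for one question. It assumes the edit history is available as time-sorted `(timestamp, probability)` pairs. The function name and the example timestamps are made up for illustration.

```python
from bisect import bisect_right
from datetime import datetime, timedelta

def pseudo_forecasts(snapshots, start, end, interval=timedelta(minutes=30)):
    """Turn one question's edit history into at most one pseudo-forecast per interval.

    snapshots: (timestamp, probability) pairs sorted by time, each giving the
    market probability right after an edit. Returns (interval_end, probability)
    for every interval whose closing probability differs from its opening one.
    """
    times = [t for t, _ in snapshots]

    def price_at(t):
        # Latest market probability at or before t; None before the first edit.
        i = bisect_right(times, t)
        return snapshots[i - 1][1] if i else None

    result = []
    t = start
    while t < end:
        p_open, p_close = price_at(t), price_at(t + interval)
        if p_close is not None and p_close != p_open:
            result.append((t + interval, p_close))
        t += interval
    return result

# Made-up history: a mistaken jump to 0.95 is corrected within the same interval.
history = [
    (datetime(2014, 9, 1, 12, 5), 0.50),
    (datetime(2014, 9, 1, 12, 10), 0.95),
    (datetime(2014, 9, 1, 12, 12), 0.55),
    (datetime(2014, 9, 1, 12, 40), 0.60),
]
print(pseudo_forecasts(history, datetime(2014, 9, 1, 12, 0), datetime(2014, 9, 1, 13, 0)))
```

The 0.95 edit never shows up because only the interval endpoints are compared. The trade-off is that a genuine forecast that someone else undoes inside the same interval is lost too.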

We’ll look at variations; in fact, we have a couple in an experimental “Executive Dashboard” that hasn’t been fully vetted. On the other hand, I’m happy to see we’re doing this well “warts and all”. OK, so some warts are already removed: this plot includes our usual “de-stuttering” rule, which collapses an uninterrupted string of edits by the same person on the same target+assumptions into a single edit. But in the anomaly above, someone else got a small correction in before the original forecaster reversed the mistake.

Anyone have a real word to use in place of “de-stuttering”?
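The de-stuttering rule described above can be sketched like this. It assumes edits are time-ordered dicts and that a run is interrupted only by another user's edit on the same question-and-assumptions key. The field names are hypothetical.

```python
def destutter(edits):
    """Collapse uninterrupted runs of edits by one user on one (question, assumptions) key.

    edits: time-ordered dicts with keys "user", "key", "before", "after".
    A run is broken only by another user's edit on the same key; edits on other
    keys do not interrupt it. Each run keeps its first "before" and last "after".
    """
    collapsed = []
    last_on_key = {}  # key -> index in `collapsed` of the latest edit on that key
    for e in edits:
        i = last_on_key.get(e["key"])
        if i is not None and collapsed[i]["user"] == e["user"]:
            collapsed[i] = {**collapsed[i], "after": e["after"]}  # extend the run
        else:
            collapsed.append(dict(e))
            last_on_key[e["key"]] = len(collapsed) - 1
    return collapsed
```

Consider the anomaly: forecaster A jumps to an extreme, B makes a small correction, and A reverses. This leaves three edits, because B's edit breaks A's run.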

I have a question about the algorithm used to determine the points allocated to a winner.

Assume that (1) I know the “correct” expected value of a question, (2) other predictors will move any bet I make back towards a different (and constant) EV indefinitely, and (3) I want to invest a fixed number of points in this question.

How do I maximize my expected winnings? Do I make a few large bets in Power Mode to reset the EV to my exact EV, or do I make many smaller bets with predictions between my “true” EV and the rest of the market’s guess?

Thanks for any insight!

@Robert: Per Robin Hanson: you gain by making trades that move the price closer to the right answer. However, the closer the price is to the right answer, the less you gain from each point you spend. So if you expect people to counter your moves toward the right answer, you’d rather make those moves while the price is farther from the right answer than closer to it. Best to move it a bit that way, let other people move it back, move it a bit again, and so on.

Yeah, lots of smaller bets away from the truth. It’s an exploitative strategy.
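The diminishing-returns argument can be checked numerically. This sketch assumes a binary question scored with a logarithmic market scoring rule, with the liquidity `b` set to 1. It also treats "points spent" as the worst-case loss of a move, which is an interpretation and not SciCast's documented accounting.

```python
import math

def expected_gain(p_market, x, q, b=1.0):
    """Expected payoff of moving a binary market from p_market to x when the
    true probability is q, under a logarithmic market scoring rule."""
    return b * (q * math.log(x / p_market) + (1 - q) * math.log((1 - x) / (1 - p_market)))

def points_at_risk(p_market, x, b=1.0):
    """Worst-case loss of that move: the payoff if the outcome you moved against happens."""
    if x > p_market:
        return b * math.log((1 - p_market) / (1 - x))
    return b * math.log(p_market / x)

def gain_per_point(p_market, x, q, b=1.0):
    # Undefined for x == p_market (no move, nothing at risk).
    return expected_gain(p_market, x, q, b) / points_at_risk(p_market, x, b)

# Market sits at 0.5, true probability 0.8: small moves earn more per point at risk.
for x in (0.55, 0.65, 0.8):
    print(f"move 0.50 -> {x:.2f}: {gain_per_point(0.5, x, 0.8):.3f} expected per point at risk")
```

Suppose others keep pushing the price back to 0.5. Then repeating the small 0.50 to 0.55 move earns more per point than one jump to 0.8, which is the point of the reply above.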

I’m not at all sure I understand how to interpret these, but if we assume for a moment that the super users and Safe Mode users are completely disjoint, could there be a connection between the right-hand side of the Safe Mode graphs (where they appear to be overconfident that things will occur?) and the left-hand side of the super user graphs (where they appear reluctant to say things will not occur?)

(fwiw, that’s three questions total.)

I’m going to assume I’m in the super user group and point out that my program will give up on fighting the overconfidence of others after a while. I might know the right answer and be fairly confident, but I won’t pour in the same kinds of points that Safe Mode users (who aren’t even aware of the points they’re tying up!) are willing to pour in. I think the other super users might behave similarly, even if it isn’t as explicit.

Safe-mode forecasts can come from anyone for the purpose of these graphs. Although super users prefer power mode to safe mode, they make most of the forecasts and still occasionally use safe mode, so about half of the safe-mode forecasts are from super users.

@jkominek: Can you expand on your Safe Mode comments? It’s actually quite hard to pour lots of points into Safe Mode. Safe Mode never uses more than 1% of someone’s Available points on a single edit. That’s 50 points for a new user, enough to move a 50% forecast to 65%. (When we offered repeating trades, Safe Mode would continue to assert your opinion for a week, but that’s been discontinued for a while now.)
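This sketch walks through the arithmetic of a single edit. It assumes a binary question under a logarithmic market scoring rule, and it treats the points an edit "uses" as its worst-case loss. The liquidity `b` is back-solved from the 50-point, 50% to 65% example, and the 5000 Available points are inferred from "1% is 50 points". Neither is a documented SciCast setting.

```python
import math

def implied_liquidity(p0, p1, points):
    """Back-solve the liquidity b at which moving a binary market up from p0 to p1
    puts exactly `points` at risk (hypothetical log-scoring-rule model)."""
    return points / math.log((1 - p0) / (1 - p1))

def safe_mode_target(p0, available, b, fraction=0.01):
    """Probability reached by one upward edit that risks `fraction` of Available
    points (assumed Safe Mode cap of 1%)."""
    budget = fraction * available
    return 1 - (1 - p0) * math.exp(-budget / b)

b = implied_liquidity(0.5, 0.65, 50)             # about 140 under these assumptions
print(round(safe_mode_target(0.5, 5000, b), 4))  # 0.65, matching the example above
```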

Sure, 50 points if they trade once. I’m pretty sure I’ve seen, on more than a few occasions, someone sitting there and Safe Mode’ing over and over until they got the result they wanted. (Apparently they didn’t notice Power Mode?) And of course persistent users can, and have, manually accomplished the same effect as the repeating trades.

I think of “pouring points” into a question as consistently trading in the same direction without being aware of the total points you’re tying up in that question. Safe Mode obscures the fact that you have a limited supply of points for trading, so you can keep “pouring” until you get bored of the site or run out of points. I’m wondering whether super users are more reluctant to “pour” in points, so that they can’t fully balance out the points “poured” in by Safe Mode users. That would leave us with the observed complementary(?) distortions between the two groups. Maybe?
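"Pouring" can be sketched as repeated Safe Mode-style edits in one direction. This uses the same hypothetical log-scoring-rule model, with a back-solved liquidity of about 140 and 5000 starting Available points. It also assumes that points tied up are deducted from Available and that nobody else trades in between. None of these are confirmed SciCast mechanics.

```python
import math

def pour(p0, available, b, edits, fraction=0.01):
    """Repeat upward edits, each risking `fraction` of the Available points that
    remain. Returns (final probability, total points tied up in the question)."""
    p, tied_up = p0, 0.0
    for _ in range(edits):
        budget = fraction * (available - tied_up)  # each edit is capped at 1% of what's left
        p = 1 - (1 - p) * math.exp(-budget / b)
        tied_up += budget
    return p, tied_up

for n in (1, 10, 50):
    p, spent = pour(0.5, 5000, 140.0, n)
    print(f"{n:>2} edits: probability {p:.3f}, points tied up {spent:.0f}")
```

No single edit exceeds the 1% cap, yet the total at risk keeps growing with every repetition. Under this model, it always equals the cost of making the whole move at once.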

It may just be that there are a lot more casual / new users, but I’d be interested to see how often forecasters reiterate opinions in safe mode. Supers are more active, and so I assume much more likely to use up their Available (I have to check on this). If you are resource-limited, you should exhibit a favorite/longshot bias. Whether the market should is another question.

I looked into this a little on the Google Group. Discussion is here: https://groups.google.com/forum/#!topic/scicasters/Jbc06ubKmD0

I’m not too surprised that the super forecasters lose accuracy at the extremes. The super forecasters are sometimes liquidity-constrained, and that’s why you see questions like “Is there life on Mars?” trading at 5% or so.

Yeah, it would be nice if, instead of *all* trades being counted, only the trades made directly before another user’s trade counted.

A trade from 50% -> 10% should be equivalent to a trade from 50% -> 30% -> 10% or a trade from 50% -> 70% -> 10%, as long as these are made in one burst by one user.
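For scoring, these paths already are equivalent under a market scoring rule, because the payoffs of successive moves telescope. The sketch below assumes a logarithmic rule on a binary question. The function name is hypothetical.

```python
import math

def log_score_change(path, outcome, b=1.0):
    """Total log-scoring-rule payoff of a sequence of moves on a binary question.
    path: successive market probabilities of "yes"; outcome: True if "yes" happened."""
    total = 0.0
    for before, after in zip(path, path[1:]):
        if outcome:
            total += b * math.log(after / before)
        else:
            total += b * math.log((1 - after) / (1 - before))
    return total

for outcome in (True, False):
    print([round(log_score_change(path, outcome), 6)
           for path in ([0.5, 0.1], [0.5, 0.3, 0.1], [0.5, 0.7, 0.1])])
```

Each row prints the same payoff three times. A calibration chart that counts every edit as a forecast is not path-independent, though, so collapsing bursts matters there even though it changes nothing for scores.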

@Ted: for analysis we already collapse an uninterrupted series of edits by the same user on the same assumption-question combination into a single edit. But in this case someone interrupted.