The final SciCast annual report has been released! See the “About” or “Project Data” menus above, or go directly to the SciCast Final Report download page.

Executive Summary (excerpts)

Registration and Activity

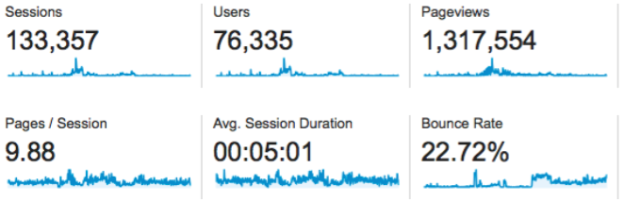

SciCast has seen over 11,000 registrations, and over 129,000 forecasts. Google Analytics reports over 76K unique IP addresses (suggesting 8 per registered user), and 1.3M pageviews. The average session duration was 5 minutes.

As of May 22, 2015, SciCast has published 1,275 valid questions and created 494 links among 655 questions. Of these, 624 questions remained open on May 22, 2015, of which 344 are linked.

SciCast has an average Brier score of 0.267 overall (0.240 on binary questions), beating the uniform distribution 85% of the time, by about 48%. It is also 18-23% more accurate than the available baseline, an unweighted linear opinion pool (ULinOP) of its own “Safe Mode” estimates, even though those estimates are informed by the market. It beats that ULinOP about 7 times in 10.
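To make these comparisons concrete, here is a minimal sketch of how a market forecast can be scored against the uniform baseline and against an unweighted linear opinion pool. It assumes the common multi-outcome Brier score (sum of squared errors over all outcomes, ranging from 0 to 2), which is consistent with a uniform binary forecast scoring 0.5; the function names and the example forecasts are hypothetical, not SciCast's scoring code.

```python
from typing import Sequence


def brier(forecast: Sequence[float], outcome_index: int) -> float:
    """Multi-outcome Brier score: sum of squared errors over all outcomes.

    0 is perfect; 2 means all probability mass was on a wrong outcome.
    """
    return sum(
        (p - (1.0 if i == outcome_index else 0.0)) ** 2
        for i, p in enumerate(forecast)
    )


def uniform(n: int) -> list[float]:
    """Uninformed baseline: equal probability on each of n outcomes."""
    return [1.0 / n] * n


def linear_pool(forecasts: Sequence[Sequence[float]]) -> list[float]:
    """Unweighted linear opinion pool (ULinOP): outcome-wise mean of forecasts."""
    k = len(forecasts)
    return [sum(col) / k for col in zip(*forecasts)]


# Hypothetical binary question that resolved to outcome 0 ("Yes").
market = [0.7, 0.3]
safe_mode = [[0.6, 0.4], [0.9, 0.1], [0.5, 0.5]]  # hypothetical individual estimates

b_market = brier(market, 0)                 # about 0.18
b_uniform = brier(uniform(2), 0)            # 0.5 for any binary question
b_pool = brier(linear_pool(safe_mode), 0)   # score of the pooled baseline
improvement = 1 - b_market / b_uniform      # fractional gain over uniform
print(b_market, b_uniform, b_pool, improvement)
```

Averaging such per-question comparisons across all resolved questions gives statistics of the kind quoted above (how often, and by how much, the market beats each baseline).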

Y4 Research and Development

One of the main contributions in Year 4 (Y4, or contract Y4) was a set of incentive studies. The first pair of studies was planned at the end of Y3 and ran at the beginning of Y4. The first, a 4-week randomized controlled trial, showed that activity incentives strongly affect activity without hurting accuracy. The second 4-week trial was modified to also test accuracy incentives, but it was too small and too weak to detect an effect. We therefore designed a four-month matched-question randomized controlled trial using over 300 questions and over 100 actual final resolutions. Within the experiment, questions in their award-eligible state (e.g. Dec and Feb) were three times as active as those in their award-ineligible state (e.g. Jan and Mar), with fifteen times as much information per edit, for an average gain from incentives of forty times the information. Furthermore, the customary mean-of-mean daily Brier score (MMDB) for experiment questions was better than for non-experiment questions and better than averages from before the experiment. (The out-of-experiment questions were all longer-term questions and did not get much traffic, but when they did, it tended to be very informative.) The final study examined the effect of incentivizing conditional forecasts while paying for (expected) accuracy: it created more conditional edits, more links, and more mutual information in the joint distribution.
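The mean-of-mean daily Brier score averages twice: first over the days each question was open, then over questions. The sketch below shows that structure under two assumptions not spelled out in the report: each day is represented by a single market snapshot (for example, the estimate in force at the end of the day), and the Brier score is the multi-outcome sum of squared errors. All names and data are hypothetical.

```python
from statistics import mean
from typing import Sequence


def brier(forecast: Sequence[float], outcome_index: int) -> float:
    """Multi-outcome Brier score (sum of squared errors over outcomes)."""
    return sum(
        (p - (1.0 if i == outcome_index else 0.0)) ** 2
        for i, p in enumerate(forecast)
    )


def mean_daily_brier(daily: Sequence[Sequence[float]], outcome_index: int) -> float:
    """Average Brier score of one question over the days it was open.

    daily[d] is assumed to be the market estimate in force on day d.
    """
    return mean(brier(f, outcome_index) for f in daily)


def mmdb(questions: Sequence[tuple[Sequence[Sequence[float]], int]]) -> float:
    """Mean over questions of each question's mean daily Brier score.

    Averaging per question first keeps long-running questions from
    dominating the overall score.
    """
    return mean(mean_daily_brier(days, outcome) for days, outcome in questions)


# Hypothetical resolved questions: (daily snapshots, index of true outcome).
q1 = ([[0.5, 0.5], [0.7, 0.3], [0.9, 0.1]], 0)  # open 3 days, resolved "Yes"
q2 = ([[0.6, 0.4], [0.2, 0.8]], 1)              # open 2 days, resolved "No"
print(mmdb([q1, q2]))
```

Computing this separately for experiment and non-experiment questions yields the kind of comparison described above.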

We also developed an executive dashboard (Figure 2) that allows market administrators and clients to see market activity, accuracy, and calibration on one screen, and filter or “drill down” interactively.

SciCast also did a lot of work under the hood, notably developing a novel approach to approximate inference suited to prediction markets and analyzing a tree-based data structure for ordered questions of large (and potentially unbounded) arity. We deployed user-added arcs and saw as many as 60 new arcs in a single day. Before that, we reorganized the combo interface so that assumptions are always salient. We added the ability to remove links, to measure link strength via mutual information, and to find the exact network complexity a new arc will require (or will release if removed). Many of these features are enabled by this year’s dynamic junction-tree compilation algorithm, which dramatically speeds up queries requiring the addition or removal of a single arc.
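Measuring link strength via mutual information can be illustrated on the smallest case: two linked binary questions whose joint distribution is given as an explicit 2×2 table. This is a minimal sketch under that assumption; SciCast computes these quantities over its full Bayesian network, and the function name and example tables here are hypothetical.

```python
import math
from typing import Sequence


def mutual_information(joint: Sequence[Sequence[float]]) -> float:
    """Mutual information I(A;B) in bits for a joint table P(A=i, B=j).

    Zero means the questions are independent (the link carries nothing);
    larger values mean a stronger dependency.
    """
    row = [sum(r) for r in joint]        # marginal P(A=i)
    col = [sum(c) for c in zip(*joint)]  # marginal P(B=j)
    mi = 0.0
    for i, r in enumerate(joint):
        for j, p in enumerate(r):
            if p > 0:                    # terms with p = 0 contribute 0
                mi += p * math.log2(p / (row[i] * col[j]))
    return mi


independent = [[0.25, 0.25], [0.25, 0.25]]  # no dependency between questions
coupled = [[0.45, 0.05], [0.05, 0.45]]      # questions usually agree
print(mutual_information(independent))      # 0.0
print(mutual_information(coupled))
```

A link whose mutual information stays near zero adds network complexity without adding information, which is one reason the ability to remove links is useful.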

Concluding Remarks

It has been quite an adventure. We are proud of our substantial accomplishments and especially thankful to those who made them possible, notably our sponsor and our forecasters. We particularly thank our sponsor for encouraging publication and distribution, and the dedicated forecasters who gave substantial portions of their free time, whether in pursuit of prizes or not, especially those who offered comments, suggestions, and critiques. We didn’t always accept the suggestions, but we read them all, acted on many, and improved because of them.

The research project is officially over, but the system may appear again with a new sponsor. Regardless, the data is available for anyone to use, though we ask that you cite us, and ideally write a guest post discussing (and promoting) your findings. We hope periodically to update this blog with our own insights and analyses.

To the future!

-Charles
