The question, “Will the FDA approve a new small molecule antibiotic before 1 January 2016?”, has resolved as Yes, with a Brier score of 0.30, better than the baseline of 0.50. Did you make a forecast? If so, log in and check your dashboard. For more information, view this post on the FDA web site.

Analysis of the Predictions

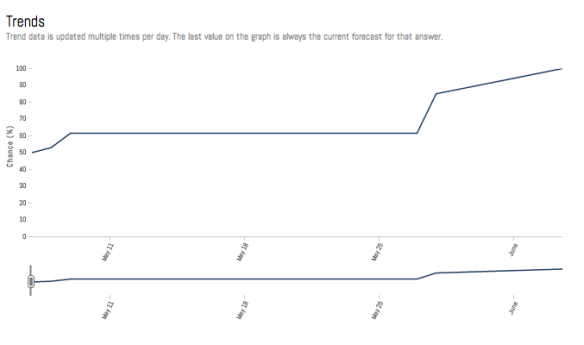

The Brier score for a uniform distribution forecast “UBS” was 0.50. Our raw market Brier Score¹ (before smoothing or adjustments) was 0.30.

The Brier Score (Brier 1950) is a measurement of the accuracy of probabilistic predictions. As a distance metric, a lower score is better than a higher score. The market Brier Score ranges from 0 to 2, and is the sum of the squared differences between the individual forecasts and the outcome weighted by how long the forecasts were on the market. On a binary (Yes/No) question, simply guessing 50% all the time yields a “no courage” score of 0.5.
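To make the definition above concrete, here is a minimal Python sketch of a time-weighted Brier score. It is an illustration under stated assumptions, not the market's actual scoring code: the function names are hypothetical, the forecast values and durations in the usage lines are made up, and the sketch assumes the time weighting is a duration-weighted average (each forecast's score multiplied by how long it stood, divided by the total time).

```python
from typing import Sequence, Tuple


def brier_score(probs: Sequence[float], outcome_index: int) -> float:
    """Sum of squared differences between a forecast and the outcome.

    `probs` holds one probability per possible outcome; the outcome that
    actually happened counts as 1 and every other outcome as 0.
    The result ranges from 0 (perfect) to 2 (certain and wrong).
    """
    return sum(
        (p - (1.0 if i == outcome_index else 0.0)) ** 2
        for i, p in enumerate(probs)
    )


def time_weighted_brier(
    forecasts: Sequence[Tuple[Sequence[float], float]],
    outcome_index: int,
) -> float:
    """Average the Brier score over forecasts, weighted by duration.

    Each entry is (probs, duration), where duration is how long that
    forecast stood on the market. Normalizing by total duration is an
    assumption of this sketch.
    """
    total = sum(duration for _, duration in forecasts)
    if total <= 0:
        raise ValueError("total duration must be positive")
    return sum(
        brier_score(probs, outcome_index) * duration
        for probs, duration in forecasts
    ) / total


# A binary question where the forecast is always 50%: the "no courage" score.
print(brier_score([0.5, 0.5], 0))  # 0.5

# Hypothetical history: 60% on Yes for 3 days, then 80% on Yes for 1 day.
print(time_weighted_brier([([0.6, 0.4], 3.0), ([0.8, 0.2], 1.0)], 0))
```

Because the score sums over every possible outcome, a binary forecast is penalized on both the Yes and the No side, which is why the scale tops out at 2 rather than 1.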

There were 5 forecasts made by 3 unique users.

Participants left 2 comments. Here is one:

Attention Forecasters! This question has resolved “Yes” as the FDA recently approved Dalvance as an antibacterial drug to treat adults with skin infections. Thanks to @PrieurDeLaCoteD’Or for alerting administrators! Official record here.

This related question also closed with the same answer: Which small molecule antibiotic will the FDA approve first?

The Brier score for a uniform distribution forecast “UBS” was 0.94. Our raw market Brier Score (before smoothing or adjustments) was 0.85.

There were 14 forecasts by 2 unique users. See the comments here.

Check out these related forecasting questions that are still active on the market.


1. The Ordered Brier is officially known as the Ranked Probability Score (RPS).

Not much activity here — glad the initial forecaster put 60+% on the right outcome.

How do you choose the questions to do write-ups on? It seems like the ones with higher forecast / comment counts would be a bit more interesting. (Or at least, they might attract some of the forecasters over here to discuss the aftermath?)

We agree… But watch for posts on each of the closed questions. We would love to see you discuss the aftermath!

I would love to see a blog post regarding the philosophy behind the four tree pollen questions and the rationale behind setting them up the way you did. In particular, why you defined rules of resolution that were severely removed from the questions (how many high/very-high pollen days each city experienced during May). Also, why forecasting remained open for a full week beyond the end of the month, inviting all sorts of ridiculous “forecasting.”

p.s. Please resolve these as soon as possible after they close. Many people have way too much liquidity tied up in this prolonged mess.